Scaling Laws & The Scaling Hypothesis

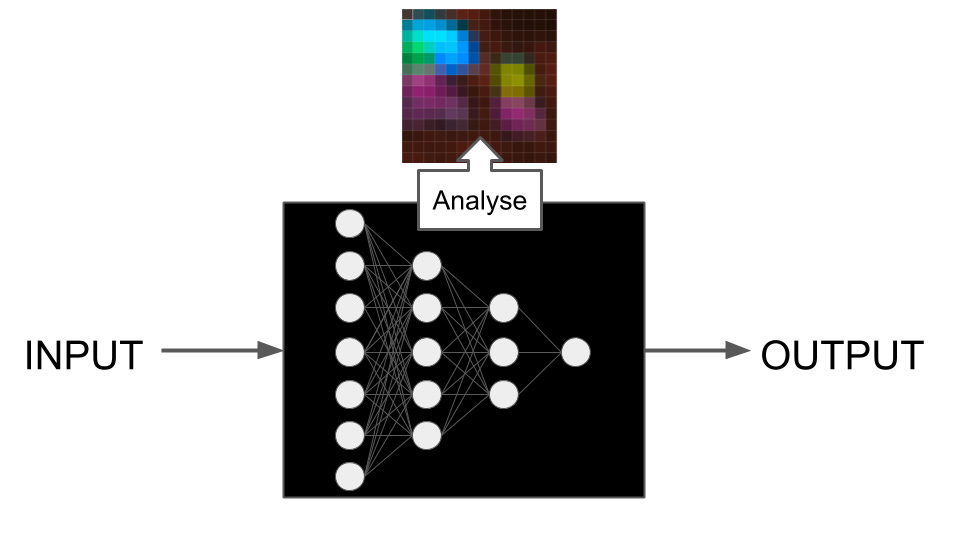

What is the Scaling Hypothesis?

The hypothesis that capability keeps improving, smoothly and predictably, as three key ingredients are scaled up together:

- Bigger networks (more parameters)

- Bigger data (more training examples)

- Bigger compute (more processing power)

"The models just want to learn... If you optimize them in the right way, they just want to solve the problem regardless of what the problem is. So get out of their way."

— Dario Amodei, referencing insights from Ilya Sutskever

Empirical Observations

Scaling laws are not fundamental laws of physics, but empirical regularities that have consistently predicted AI progress. As model size, data, and compute have increased, capability improvements have followed predictable patterns.
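To make "predictable patterns" concrete, here is a minimal sketch of how such a regularity is typically fitted and extrapolated. It assumes a power-law form, loss = a * compute^(-alpha), which is the shape commonly reported in scaling-law studies. The data points, the constants `TRUE_A` and `TRUE_ALPHA`, and the helpers `fit_power_law` and `predict` are all hypothetical and chosen for illustration only. They are not measurements from any real model.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**(-alpha) by ordinary least squares in log-log space.

    A power law is a straight line on log-log axes:
        log(y) = log(a) - alpha * log(x)
    so fitting reduces to a simple linear regression on the logs.
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    cov = sum((lx - mean_x) * (ly - mean_y) for lx, ly in zip(log_x, log_y))
    var = sum((lx - mean_x) ** 2 for lx in log_x)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)


def predict(a, alpha, x):
    """Evaluate the fitted power law at x."""
    return a * x ** (-alpha)


# Synthetic, made-up data: NOT results from any real training run.
TRUE_A, TRUE_ALPHA = 10.0, 0.05
compute = [10.0 ** k for k in range(3, 9)]            # hypothetical compute budgets
loss = [TRUE_A * c ** (-TRUE_ALPHA) for c in compute]  # noiseless by construction

a, alpha = fit_power_law(compute, loss)
extrapolated = predict(a, alpha, 1e10)  # two orders of magnitude past the data
print(f"fitted a={a:.3f}, alpha={alpha:.3f}, predicted loss at 1e10: {extrapolated:.3f}")
```

Because the synthetic data is noiseless, the fit recovers the constants it was generated from. Real measurements are noisy, and the trend is an observed regularity rather than a guarantee, so an extrapolation is only as reliable as the assumption that the same curve continues to hold.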

Timeline Predictions

"If you extrapolate the curves... it does make you think that we'll get to [powerful AI] by 2026 or 2027. I think there are still worlds where it doesn't happen in 100 years, but the number of those worlds is rapidly decreasing."

— Dario Amodei